Drowning in data chaos? Azure Data Factory is the bridge to clarity

Azure Data Factory: Transforming Big Data into Business Insights

A product with several applications can generate data at an exponentially growing rate, day after day. This is what we call Big Data.

Because this data comes from many different sources, it becomes even harder to manage.

Raw data on its own does not provide any context, meaning, or insights to analysts, data scientists, or business decision-makers.

To turn these massive repositories of unprocessed data into useful business insights, we need a service that can orchestrate and operationalize the processes involved.

This is where our hero comes in: Azure Data Factory.

What is Azure Data Factory?

Azure Data Factory is a managed cloud service for data integration. It orchestrates the workflows that support:

- Storing data

- Analyzing data

- Transforming data

- Publishing organized data

- Visualizing data

Features of Azure Data Factory

1. Data Compression

- Copying huge amounts of data and writing it to the target data store is a major concern when working with big data.

- Azure Data Factory can compress data during a copy and write the compressed output to the target, reducing bandwidth usage.
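To see why compression saves bandwidth, here is a minimal Python sketch that uses the standard-library `gzip` module on a hypothetical CSV payload. It is not how Azure Data Factory compresses data internally (in Data Factory you choose a compression setting on the dataset); it only shows that repetitive tabular data shrinks considerably and decompresses back to the exact original bytes.

```python
import gzip

# Hypothetical sample payload: repetitive CSV rows, typical of exported tables.
rows = ["order_id,region,amount"] + [f"{i},west,100.00" for i in range(10_000)]
raw = "\n".join(rows).encode("utf-8")

# Compress before "sending", as a copy step with a compression setting would.
compressed = gzip.compress(raw)

# The receiving side decompresses to recover the exact original bytes.
restored = gzip.decompress(compressed)

print(f"raw: {len(raw):,} bytes, gzip: {len(compressed):,} bytes")
print(f"bytes saved: {1 - len(compressed) / len(raw):.1%}")
assert restored == raw
```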

2. Extensive Connectivity Support for Different Data Sources

- Reading from and writing to many different data sources can lead to connectivity issues.

- Azure Data Factory provides built-in connectors for a wide range of data sources, including on-premises databases, cloud storage, and SaaS applications.
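One way to picture broad connector support is a common read/write interface that every source and sink implements, so a copy step never needs to know which store it is talking to. The Python sketch below assumes that design; `Connector`, `CsvTextConnector`, `InMemoryTableConnector`, and `copy_rows` are hypothetical names, not part of any Azure SDK.

```python
import csv
import io
from abc import ABC, abstractmethod

Row = dict[str, str]


class Connector(ABC):
    """Hypothetical common interface every data-source connector implements."""

    @abstractmethod
    def read(self) -> list[Row]: ...

    @abstractmethod
    def write(self, rows: list[Row]) -> None: ...


class CsvTextConnector(Connector):
    """Reads/writes CSV held in a string (stand-in for a file store)."""

    def __init__(self, text: str = "") -> None:
        self.text = text

    def read(self) -> list[Row]:
        return list(csv.DictReader(io.StringIO(self.text)))

    def write(self, rows: list[Row]) -> None:
        buf = io.StringIO()
        if rows:
            writer = csv.DictWriter(buf, fieldnames=list(rows[0]))
            writer.writeheader()
            writer.writerows(rows)
        self.text = buf.getvalue()


class InMemoryTableConnector(Connector):
    """Appends rows to a Python list (stand-in for a database table)."""

    def __init__(self) -> None:
        self.rows: list[Row] = []

    def read(self) -> list[Row]:
        return list(self.rows)

    def write(self, rows: list[Row]) -> None:
        self.rows.extend(rows)


def copy_rows(source: Connector, sink: Connector) -> int:
    """Copy every row from source to sink; neither side knows the other's type."""
    rows = source.read()
    sink.write(rows)
    return len(rows)


source = CsvTextConnector("id,name\n1,Ada\n2,Grace\n")
sink = InMemoryTableConnector()
print(copy_rows(source, sink), sink.rows)
```

Supporting a new store in this design means adding one more `Connector` subclass; the copy logic stays unchanged.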

3. Custom Event Triggers

- You can automate data processing with custom event triggers, which start a pipeline when a matching event is published to a custom topic in Azure Event Grid.
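The matching logic behind an event trigger can be sketched in a few lines of Python. The sketch assumes a trigger that filters on event type plus a subject prefix and suffix, loosely modeled on the filters a Data Factory custom event trigger exposes; the class names, event types, and subjects are hypothetical, and real triggers receive events from Azure Event Grid rather than from direct method calls.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Event:
    event_type: str
    subject: str


@dataclass
class CustomEventTrigger:
    """Hypothetical trigger: runs its pipelines when a matching event arrives."""

    event_types: set[str]
    subject_begins_with: str = ""
    subject_ends_with: str = ""
    pipelines: list[Callable[[Event], None]] = field(default_factory=list)

    def matches(self, event: Event) -> bool:
        # An empty prefix/suffix matches any subject.
        return (
            event.event_type in self.event_types
            and event.subject.startswith(self.subject_begins_with)
            and event.subject.endswith(self.subject_ends_with)
        )

    def handle(self, event: Event) -> bool:
        """Start every attached pipeline if the event matches; report whether it fired."""
        if not self.matches(event):
            return False
        for run_pipeline in self.pipelines:
            run_pipeline(event)
        return True


runs: list[str] = []
trigger = CustomEventTrigger(
    event_types={"Sales.FileUploaded"},
    subject_begins_with="/sales/",
    subject_ends_with=".csv",
    pipelines=[lambda e: runs.append(f"ingest {e.subject}")],
)

trigger.handle(Event("Sales.FileUploaded", "/sales/2025-01.csv"))  # fires
trigger.handle(Event("Sales.FileUploaded", "/hr/staff.csv"))       # wrong prefix
trigger.handle(Event("Sales.FileDeleted", "/sales/2025-01.csv"))   # wrong event type
print(runs)
```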

4. Data Preview and Validation

- As part of the copy activity, Azure Data Factory provides tools for previewing and validating data.
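As a rough illustration of preview-then-validate, the Python sketch below shows the first few rows of a dataset and checks every row against a simple column-to-type schema. The `preview` and `validate` functions and the sample rows are hypothetical; Data Factory's own preview and validation happen in its authoring and copy tooling, not through code like this.

```python
Row = dict[str, object]


def preview(rows: list[Row], n: int = 3) -> list[Row]:
    """Return the first n rows, like a data-preview pane."""
    return rows[:n]


def validate(rows: list[Row], schema: dict[str, type]) -> list[str]:
    """Check each row against a column -> type schema; return human-readable errors."""
    errors = []
    for i, row in enumerate(rows):
        for column, expected in schema.items():
            if column not in row:
                errors.append(f"row {i}: missing column '{column}'")
            elif not isinstance(row[column], expected):
                errors.append(
                    f"row {i}: '{column}' is {type(row[column]).__name__}, "
                    f"expected {expected.__name__}"
                )
    return errors


rows = [
    {"id": 1, "amount": 19.99},
    {"id": 2, "amount": "n/a"},
    {"id": 3},
]
print(preview(rows, 2))
for problem in validate(rows, {"id": int, "amount": float}):
    print(problem)
```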

5. Customizable Data Flows

- Azure Data Factory lets you build customizable data flows, so you can add your own actions or steps to data processing.
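A data flow can be thought of as a chain of steps, each taking rows in and passing rows out. This Python sketch assumes that model; the step builders (`filter_rows`, `derive_column`, `aggregate_sum`) and `run_flow` are hypothetical and only loosely named after mapping data flow transformations such as Filter, Derived Column, and Aggregate.

```python
from functools import reduce
from typing import Callable, Iterable

Row = dict[str, object]
Step = Callable[[list[Row]], list[Row]]


def filter_rows(predicate: Callable[[Row], bool]) -> Step:
    """Keep only rows for which the predicate is true."""
    return lambda rows: [r for r in rows if predicate(r)]


def derive_column(name: str, fn: Callable[[Row], object]) -> Step:
    """Add (or overwrite) a column computed from each row."""
    return lambda rows: [{**r, name: fn(r)} for r in rows]


def aggregate_sum(key: str, value: str) -> Step:
    """Group rows by `key` and sum `value` within each group."""
    def step(rows: list[Row]) -> list[Row]:
        totals: dict[object, float] = {}
        for r in rows:
            totals[r[key]] = totals.get(r[key], 0.0) + r[value]
        return [{key: k, value: v} for k, v in totals.items()]
    return step


def run_flow(rows: list[Row], steps: Iterable[Step]) -> list[Row]:
    """Feed the output of each step into the next."""
    return reduce(lambda acc, step: step(acc), steps, rows)


orders = [
    {"region": "west", "qty": 2, "price": 5.0},
    {"region": "east", "qty": 1, "price": 8.0},
    {"region": "west", "qty": 3, "price": 5.0},
    {"region": "east", "qty": 0, "price": 8.0},
]
flow = [
    filter_rows(lambda r: r["qty"] > 0),
    derive_column("revenue", lambda r: r["qty"] * r["price"]),
    aggregate_sum("region", "revenue"),
]
print(run_flow(orders, flow))
```

Adding a custom step only means writing another function that takes and returns a list of rows and inserting it into `flow`.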

6. Integrated Security

- Azure Data Factory offers integrated security features such as Microsoft Entra ID (formerly Azure Active Directory) integration and role-based access control (RBAC) to manage access to data flows.

- Together, these help protect your data while it is being processed.
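Role-based access control boils down to checking whether any role assigned to a user grants the requested action. The Python sketch below assumes a simplified, hypothetical role-to-permission table; Azure's real built-in roles and permission strings differ, and user identities would come from Microsoft Entra ID rather than from a dictionary.

```python
# Hypothetical, simplified role -> permission mapping (not Azure's real definitions).
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "Reader": {"pipeline:read"},
    "Operator": {"pipeline:read", "pipeline:run"},
    "Contributor": {"pipeline:read", "pipeline:run", "pipeline:edit"},
}


def is_allowed(assignments: dict[str, set[str]], user: str, action: str) -> bool:
    """True if any role assigned to `user` grants `action`; unknown users get nothing."""
    roles = assignments.get(user, set())
    return any(action in ROLE_PERMISSIONS.get(role, set()) for role in roles)


# Hypothetical user -> roles assignments.
assignments = {
    "ana@example.com": {"Reader"},
    "raj@example.com": {"Operator"},
}
print(is_allowed(assignments, "ana@example.com", "pipeline:run"))
print(is_allowed(assignments, "raj@example.com", "pipeline:run"))
```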

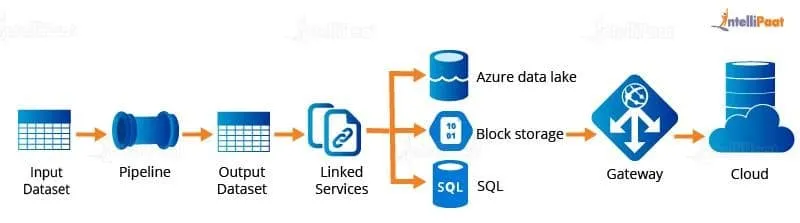

Flow Process of Azure Data Factory

The Data Factory service allows us to create pipelines that help move and transform data.

These pipelines can run on a specified schedule or as a one-time process.
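For a recurring schedule, the key calculation is finding the next run times after a given moment. This Python sketch assumes a simple fixed-interval recurrence (every N hours from a start time); `next_runs` is a hypothetical helper, not Data Factory's scheduler, which supports richer recurrences and time zones. A one-time run, by contrast, is a single invocation with no recurrence.

```python
from datetime import datetime, timedelta


def next_runs(start: datetime, interval: timedelta, after: datetime, count: int) -> list[datetime]:
    """Return the next `count` run times of a fixed-interval schedule strictly after `after`."""
    if interval <= timedelta(0):
        raise ValueError("interval must be positive")
    if after < start:
        first = start
    else:
        # Whole intervals elapsed since start, then step forward to the next slot.
        elapsed = (after - start) // interval
        first = start + (elapsed + 1) * interval
    return [first + i * interval for i in range(count)]


# Every 6 hours from midnight on 1 Jan 2025; ask for the 3 runs after 13:30.
print(next_runs(datetime(2025, 1, 1), timedelta(hours=6), datetime(2025, 1, 1, 13, 30), 3))
```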

Flow Components:

- Source: Data within our data store that needs to be processed and passed through a pipeline.

- Input Dataset: The data that enters the pipeline for processing.

- Pipeline: A logical grouping of activities that together perform a task, such as transforming data.

- Output Dataset: The structured data generated from the pipeline.

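- Linked Services: Store the connection information Data Factory needs to reach external services, much like a connection string. For example, the details required to send data to a SQL Server database.

- Gateway: Connects on-premises data to the cloud. In current versions of Data Factory, this role is played by the self-hosted integration runtime.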

- Cloud: Where structured data is stored for analysis and visualization.
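The components above map onto the JSON definitions Data Factory uses for linked services, datasets, and pipelines. The Python sketch below builds trimmed-down versions of those definitions as dictionaries, modeled on the shape of Data Factory's JSON, and checks that every reference resolves. All names and connection strings are placeholders, `ref` and `dangling_references` are hypothetical helpers, and real definitions need more properties (such as file locations and table names), so treat this as an outline rather than a deployable template.

```python
import json


def ref(name: str, kind: str) -> dict:
    """Build a reference object such as {"referenceName": ..., "type": "DatasetReference"}."""
    return {"referenceName": name, "type": kind}


# Linked services: connection information for external stores (placeholder values).
linked_services = [
    {"name": "BlobStorageLinkedService",
     "properties": {"type": "AzureBlobStorage",
                    "typeProperties": {"connectionString": "<blob-connection-string>"}}},
    {"name": "SqlLinkedService",
     "properties": {"type": "AzureSqlDatabase",
                    "typeProperties": {"connectionString": "<sql-connection-string>"}}},
]

# Datasets: the input (source files) and output (target table), each bound to a linked service.
datasets = [
    {"name": "InputDataset",
     "properties": {"type": "DelimitedText",
                    "linkedServiceName": ref("BlobStorageLinkedService", "LinkedServiceReference")}},
    {"name": "OutputDataset",
     "properties": {"type": "AzureSqlTable",
                    "linkedServiceName": ref("SqlLinkedService", "LinkedServiceReference")}},
]

# Pipeline: one Copy activity that reads the input dataset and writes the output dataset.
pipeline = {
    "name": "CopySalesPipeline",
    "properties": {
        "activities": [
            {
                "name": "CopyBlobToSql",
                "type": "Copy",
                "inputs": [ref("InputDataset", "DatasetReference")],
                "outputs": [ref("OutputDataset", "DatasetReference")],
                "typeProperties": {
                    "source": {"type": "DelimitedTextSource"},
                    "sink": {"type": "AzureSqlSink"},
                },
            }
        ]
    },
}


def dangling_references(pipeline: dict, datasets: list, linked_services: list) -> list[str]:
    """List references that point at a dataset or linked service that is not defined."""
    known_services = {ls["name"] for ls in linked_services}
    known_datasets = {ds["name"] for ds in datasets}
    problems = []
    for ds in datasets:
        target = ds["properties"]["linkedServiceName"]["referenceName"]
        if target not in known_services:
            problems.append(f"dataset {ds['name']}: unknown linked service {target}")
    for activity in pipeline["properties"]["activities"]:
        for r in activity.get("inputs", []) + activity.get("outputs", []):
            if r["referenceName"] not in known_datasets:
                problems.append(f"activity {activity['name']}: unknown dataset {r['referenceName']}")
    return problems


print(dangling_references(pipeline, datasets, linked_services))
print(json.dumps(pipeline, indent=2))
```

For an on-premises source, the linked service would additionally route through a self-hosted integration runtime (the gateway role) via its `connectVia` property.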

Companies Using Azure Data Factory

Some of the companies that use Azure Data Factory include:

- Mohawk Industries, Inc. – A U.S.-based company with over 10,000 employees and over $1B in revenue.

- IDFC FIRST Bank – A bank based in Mumbai, Maharashtra, India, with over 10,000 employees.

- Tech Mahindra – A company based in Pune, Maharashtra, India, with over 10,000 employees.

As of 2025, around 7,116 companies were using Azure Data Factory as a data integration tool.

The majority of its customers are in the United States, followed by the United Kingdom and India.
